1、引言

近些年随着“云”逐渐成为主流,云产商通过按需计量收费(如 CPU 数、容器数、存储等)的方式逐步成为业界的通用标准。众安作为互联网保险公司,公司应用全面拥抱了云原生基础设施,基于这样的背景,研发团队在架构设计层面,需要综合考量研发效率、性能、资源成本等多个因数之间的平衡。本文所切入点是从 JVM 这个点开始的,正如 OpenJ9 所宣传的特性那样,其占用内存空间小、云环境适配性强,让笔者所在团队跃跃欲试。本文通过介绍 OpenJ9、测试 OpenJ9 在 MEM 和 CPU 方面的响应、整理线上切换思考和问题、汇总线上切换收益,形成了一个完整的调研、实践及反馈闭环。整理出本文,献给大家参考。

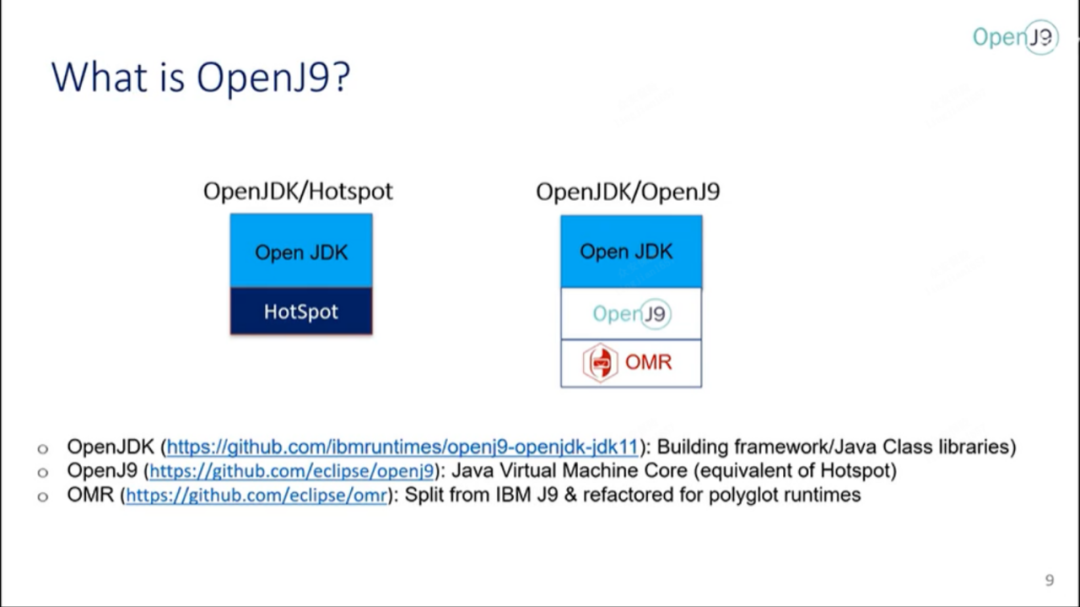

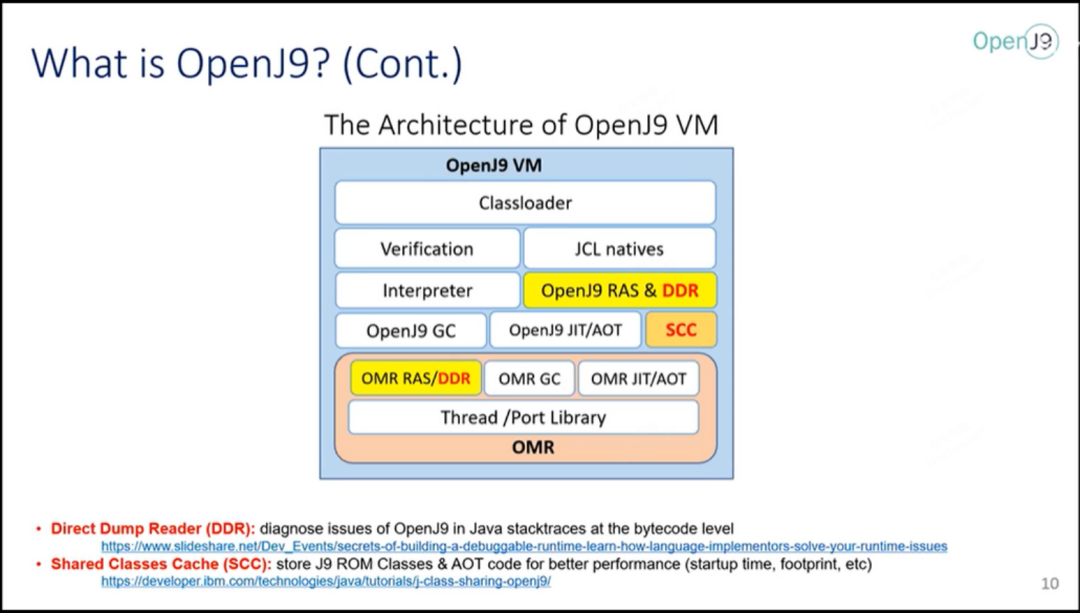

2、OpenJ9 是什么?

OpenJ9 和 Hotspot 一样,是个虚拟机,属于 JDK designer & implementer 那类。

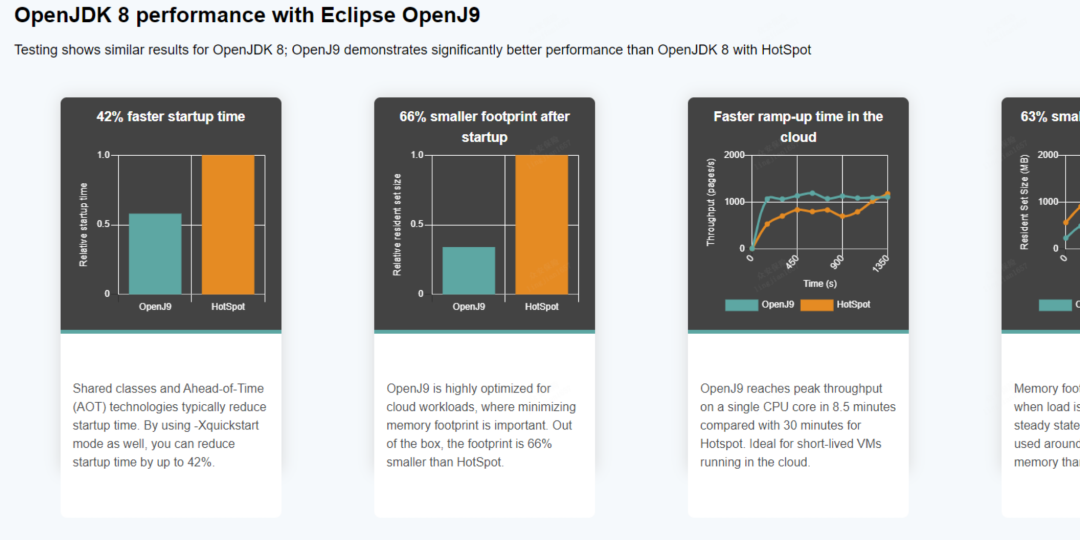

3、OpenJ9 特点、优势

根据官网文档说明,以 OpenJDK8 和 OpenJ9 比较说明其应用层面的特点、优势:

42% faster startup time,启动时间快 42% 66% smaller footprint after startup,启动后占用内存减少 66%

Faster ramp-up time in the cloud,云端环境快速提升吞吐量

63% smaller footprint during load,高负载时减少 63%的占用空间

根据 cheng jin(OpenJ9 VM Software Developer)介绍,DDR 和 SCC 是其不同于 HotSpot 的 2 个特点。

DDR:字节码、指令查看工具,不仅限于这个点

SCC:共享缓存,内存映射文件,faster startup smaller footprint

4、与 HotSpot 的一些差异

官方文档给出了 HotSpot 和 OpenJ9 的一些差异比较,详见https://www.eclipse.org/openj9/docs/openj9_newuser/

5、以众安健康险某应用为例实战 OpenJ9

根据 OpenJ9 的特性,结合笔者所在团队典型的具备该特性型应用来做些调研尝试。后续给出的验证材料会做必要的简化缩略,但符合整体的实践结论,没有特殊说明的情况下,后续所有的实践验证都是搭载的 Jdk8 版本。

5.1、启动时间

前面 5 次编译后面 5 次重启的方式验证。

HotSpot:

2022-11-21 10:23:28,681 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 18.172 seconds (JVM running for 26.026)

2022-11-21 10:34:18,089 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 20.496 seconds (JVM running for 28.51)

2022-11-21 11:08:34,687 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 21.057 seconds (JVM running for 29.406)

2022-11-21 11:14:14,482 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 19.026 seconds (JVM running for 26.751)

2022-11-21 11:21:18,403 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 25.372 seconds (JVM running for 34.543)

2022-11-21 11:25:38,611 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 17.042 seconds (JVM running for 24.267)

2022-11-21 11:32:53,958 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 19.364 seconds (JVM running for 27.723)

2022-11-21 11:37:05,300 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 18.859 seconds (JVM running for 26.526)

2022-11-21 11:41:44,996 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 20.337 seconds (JVM running for 28.833)

2022-11-21 11:46:50,933 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 19.631 seconds (JVM running for 28.274)

未开启 -Xquickstart 的 OpenJ9:

2022-11-21 11:10:24,612 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 24.462 seconds (JVM running for 30.382)

2022-11-21 11:15:34,476 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 22.395 seconds (JVM running for 28.111)

2022-11-21 11:23:45,262 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 22.468 seconds (JVM running for 27.78)

2022-11-21 11:30:35,146 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 24.835 seconds (JVM running for 31.284)

2022-11-21 11:36:08,676 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 26.649 seconds (JVM running for 32.623)

2022-11-21 11:38:48,876 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 34.609 seconds (JVM running for 41.84)

2022-11-21 11:42:27,940 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 25.345 seconds (JVM running for 31.008)

2022-11-21 11:44:31,370 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 24.534 seconds (JVM running for 30.321)

2022-11-21 11:47:19,612 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 32.299 seconds (JVM running for 39.358)

2022-11-21 11:49:20,883 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 24.551 seconds (JVM running for 30.465)

开启 -Xquickstart 的 OpenJ9:

2022-11-21 13:49:59,075 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 24.209 seconds (JVM running for 30.148)

2022-11-21 13:52:58,821 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 25.16 seconds (JVM running for 30.767)

2022-11-21 13:54:59,620 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 21.16 seconds (JVM running for 25.962)

2022-11-21 15:23:58,430 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 20.465 seconds (JVM running for 25.154)

2022-11-21 15:43:21,880 [main] INFO [StartupInfoLogger.java:61] [trace=,span=] - Started Application in 23.326 seconds (JVM running for 28.349)

结论:OpenJ9 并未比 HotSpot 加快启动时间,在有限的测试次数下看到的还是变慢了。进一步查资料,此场景应该是需要在开启 SCC+ 制作 Docker 缓存共享卷的情况下才能验证出来。

目前相关平台部门在排期支持此类自定义的功能上线,后续可以结合再次验证核实 OpenJ9 官网结论。

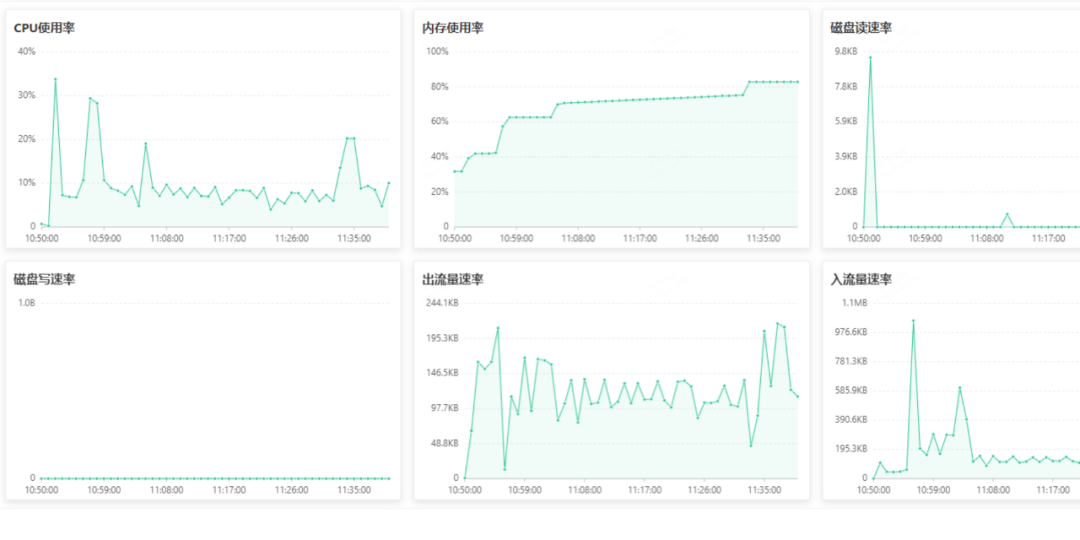

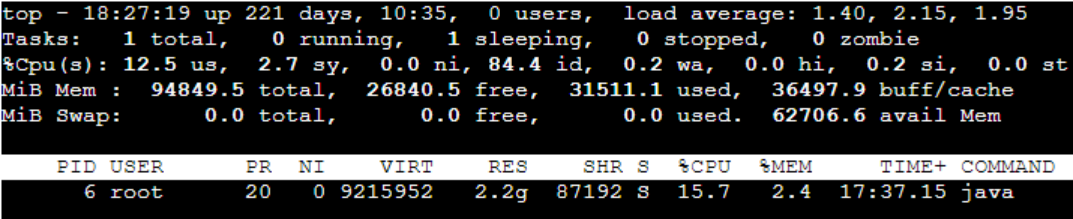

5.2、内存监控

测试方案,主要从 JVM 和 POD 两个层面检查内存变化,调研负载 1W 人【常规数据规模】、5W 人【目前已知的峰值】和 10W 人【假设未来可能的峰值】时 HotSpot 和 OpenJ9 内存的变化。

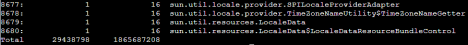

5.2.1、JVM

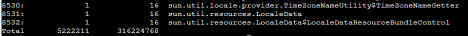

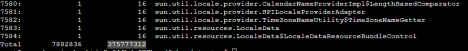

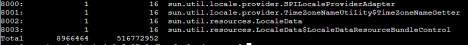

HotSpot 使用 jmap -heap id 可查看堆内存变化,但 OpenJ9 不支持 jmap -heap id,所以两者得折中一下,通过 -histo,查看对象的数量和占用堆内存大小。

静态验证:

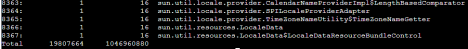

HotSpot 效果如下:

初始未负载时占用堆内存大小 278M 左右。

OpenJ9 效果如下:

初始未负载时占用堆内存大小 98M 左右。

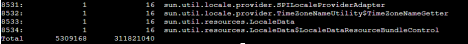

动态验证:

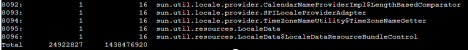

HotSpot,1W 人的场景下:

堆内存大小使用上升到 300 多~400 多 M,峰值到了 600 多 M。和监控平台上的堆内存大小使用基本上匹配。

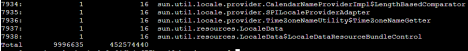

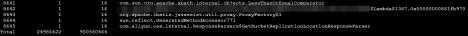

OpenJ9,1W 人的场景下:

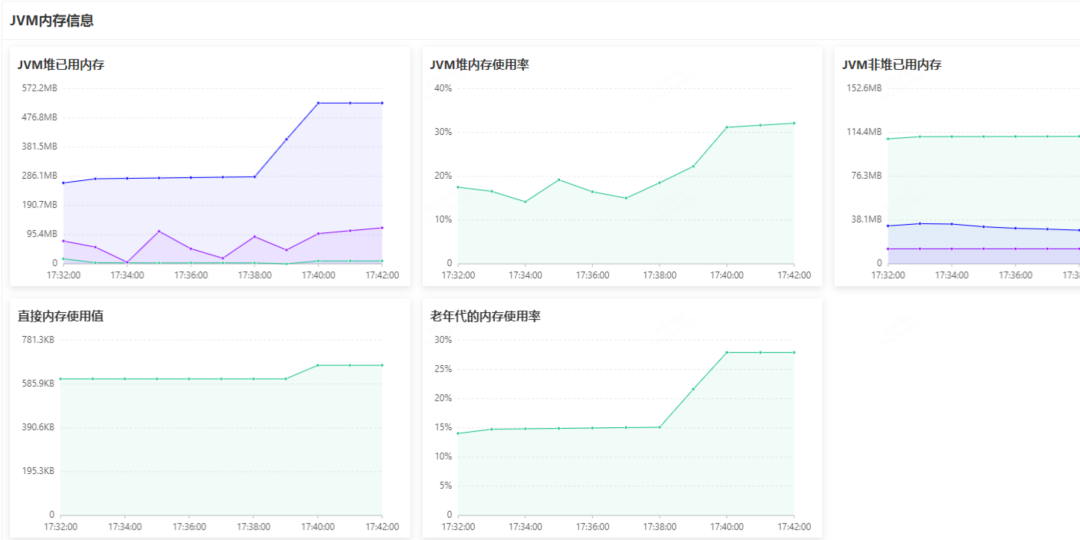

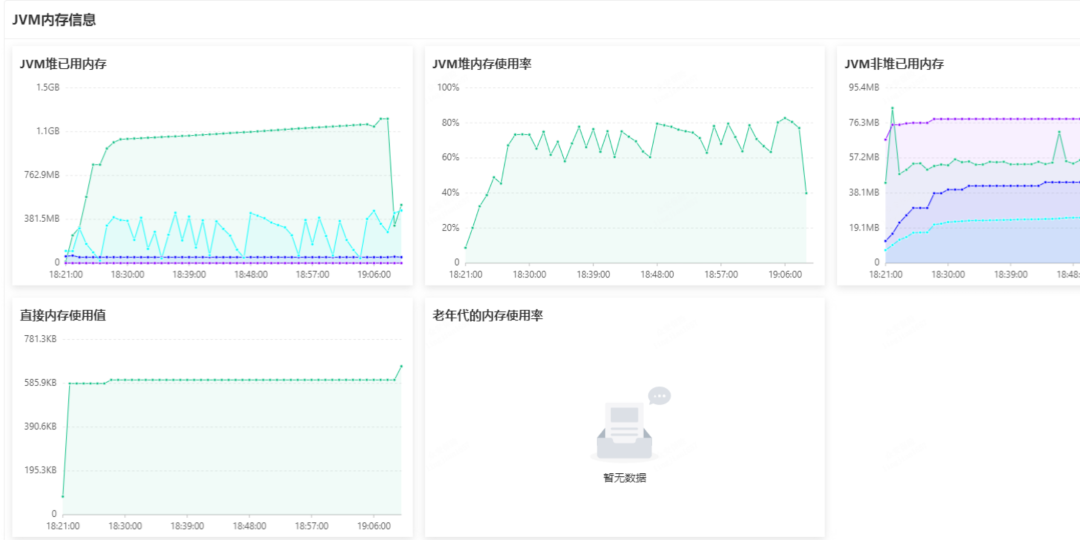

堆内存大小使用上升到 300 多~400 多 M,偶尔会跌回到 100 多 M,峰值到了 400 多 M。相关监控平台截图如下:

1 万人的结论,堆内存看起来也会快速上升,但是峰值 OpenJ9 确实低于 HotSpot,同时性能上也从 8 分钟(HotSpot)涨到了 10 分钟(OpenJ9)。内存占用少了,但性能其实降低了。

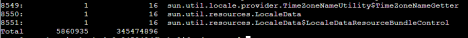

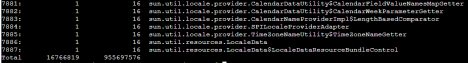

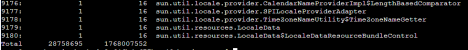

HotSpot,5W 人的场景下:

堆内存大小使用上升迅速爬升,稳定在 900 多 M~1G 左右,峰值到了 1.6G 左右,中间发生了 2 次 FULLGC。

OpenJ9,5W 人的场景下:

堆内存大小使用上升迅速爬升,不同于 HotSpot,堆内存使用呈锯齿状,峰值到了 1.2G 左右,中间发生了 4 次 FULLGC。图片耗时从 40 分钟到了 50 分钟,有一定的性能损耗。额外验证了未开启 SCC 的情况下,5W 人峰值到了 1.4G 左右。

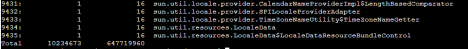

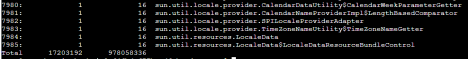

HotSpot,10W 人的场景下:

堆内存大小使用上升迅速爬升,稳定在 1.2G 左右,峰值到了 2G 左右,中间发生了数十次 FULLGC。最终因为堆内存打满无法再分配内存导致任务失败进入重试。

5.2.2、POD

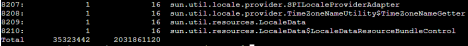

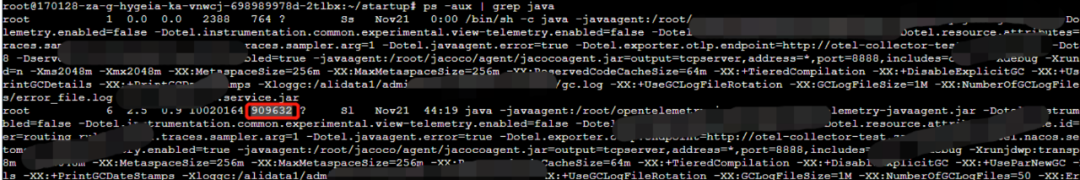

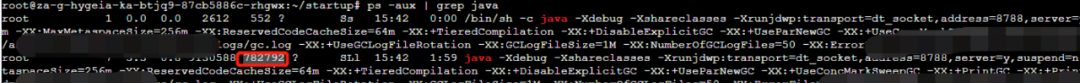

从进程角度检查内存变化。

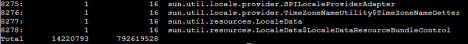

静态验证:

直接比较进程初始时的内存使用率 ps -aux | grep java

HotSpot 效果如下:

初始未负载时进程占用内存大小 888M 左右。

OpenJ9 效果如下:

初始未负载时进程占用内存大小 764M 左右。

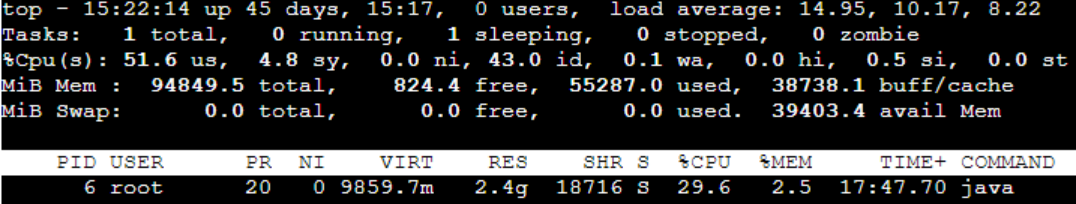

动态验证:

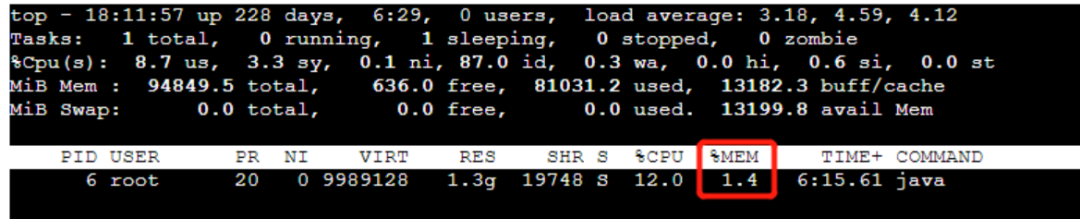

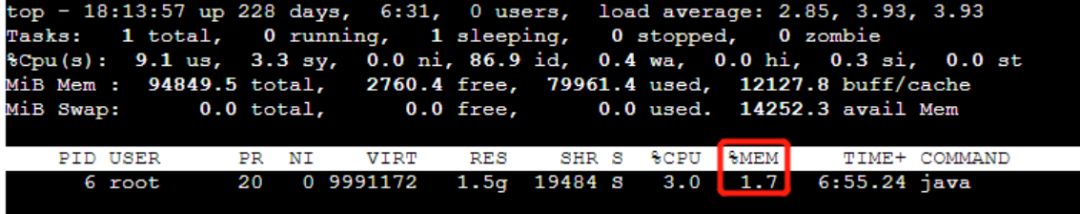

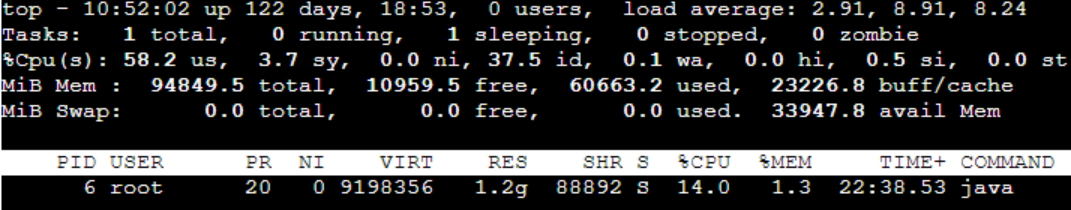

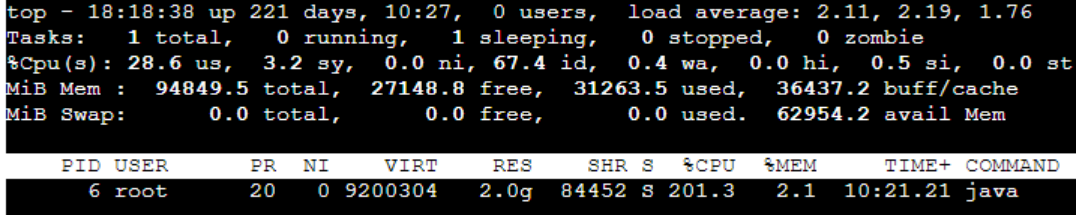

ps -ef | grep java 找到对应 id 执行 top -p id。HotSpot,1W 人的场景下:

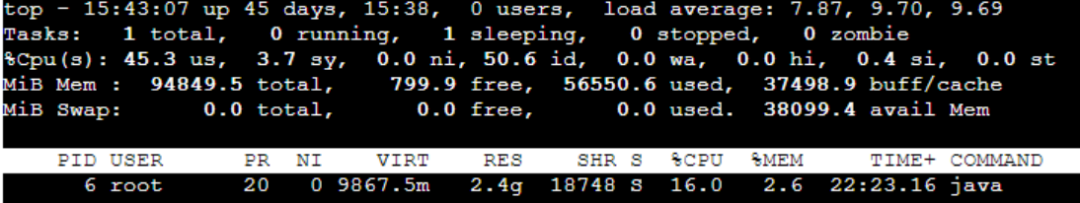

OpenJ9,1W 人的场景下:

1 万人的结论,内存使用率会上升,但是峰值上 OpenJ9 低于 HotSpot,本次测试耗时 8 分钟,和 HotSpot 持平。

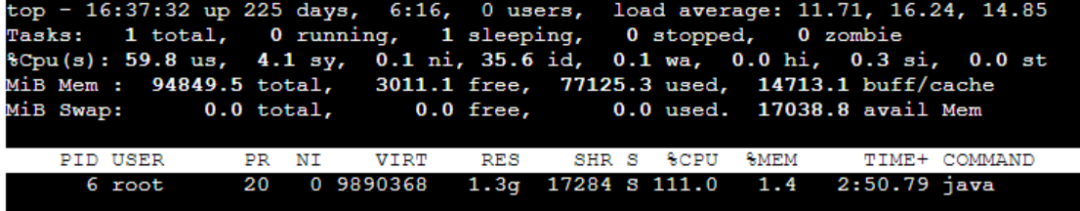

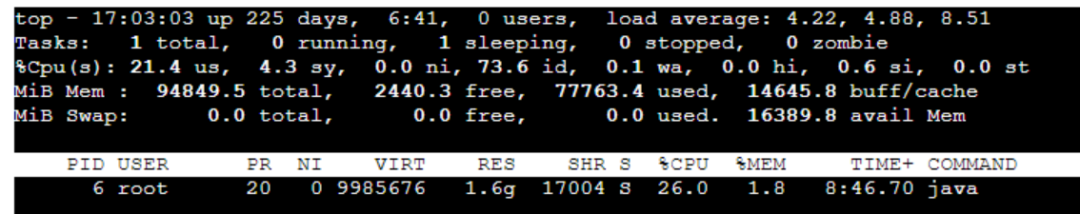

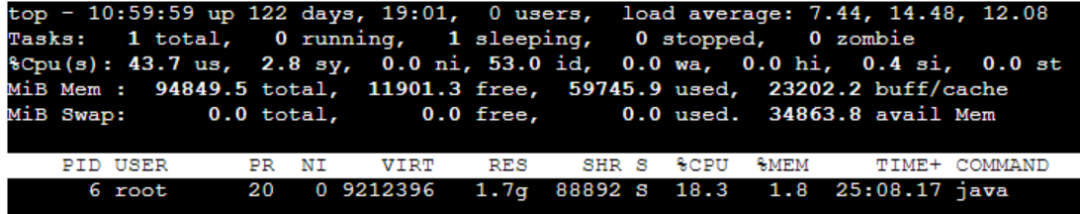

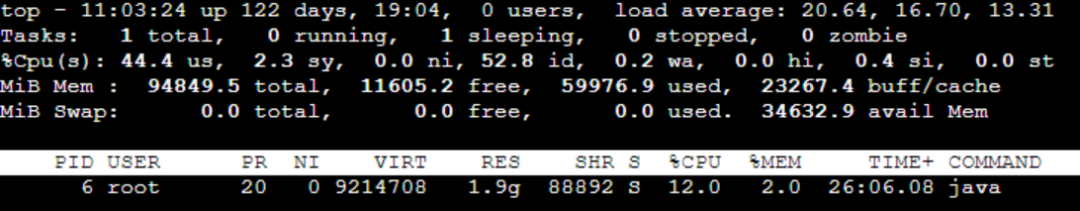

HotSpot,5W 人的场景下:

OpenJ9,5W 人的场景下:

5 万人的结论,峰值上 OpenJ9 低于 HotSpot 但看起来已经比较接近了,和 HotSpot 耗时相比较,依然是多了 10 分钟左右才执行完成。

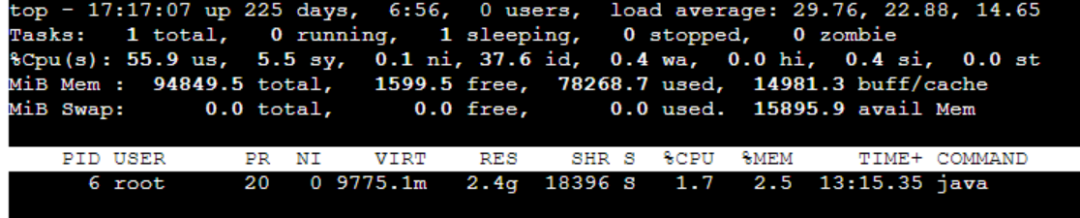

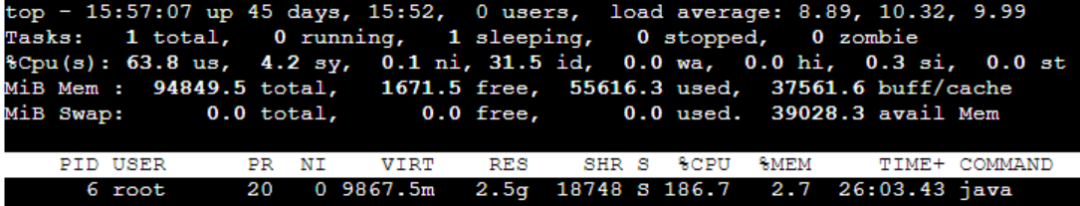

HotSpot,10W 人的场景下:

OpenJ9,10W 人的场景下:图片

结论:

常规场景下(1 万、5 万),OpenJ9 比 HotSpot 更节省内存,节省比例大概在 30% 左右(基于峰值计算);

极端场景下(10 万),OpenJ9 比 HotSpot 依然更节省内存,只是两者都无法正常完成任务处理,HotSpot 表现稳定一些,OpenJ9 出现“假死”进而被判定容器不可用,然后重启。

6、OpenJ9 的节省内存的一点思考

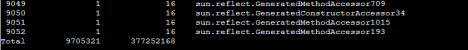

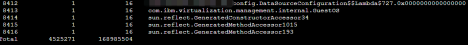

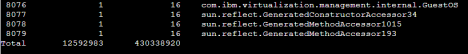

可以看到很多数据和图片都显示 OpenJ9 节省内存,网上暂未查到相关其节省内存的原理介绍,遂从直觉上判断是否其创建对象大小分配上有和 HotSpot 不一样的逻辑。

参考链接 7 中有对应的测试类 SizeOfObject 和对应的测试代码 SizeOfObjectTest,此处不再赘述。

基于 HotSpot 测试的结论和链接中一致:

HotSpot:默认开启指针压缩

sizeOf(new Object())=16sizeOf(new A())=16

sizeOf(new B())=24 -------

sizeOf(new B2())=24 -------

sizeOf(new B[3])=32

sizeOf(new C())=24

fullSizeOf(new C())=128 -------

sizeOf(new D())=32 -------

fullSizeOf(new D())=64 -------

sizeOf(new int[3])=32

sizeOf(new Integer(1)=16

sizeOf(new Integer[0])=16 -------

sizeOf(new Integer[1])=24

sizeOf(new Integer[2])=24

sizeOf(new Integer[3])=32

sizeOf(new Integer[4])=32

sizeOf(new A[3])=32

sizeOf(new E())=24

基于 OpenJ9 测试的结论:

OpenJ9:默认配置

sizeOf(new Object())=16

sizeOf(new A())=16

sizeOf(new B())=16 -------

sizeOf(new B2())=16 -------

sizeOf(new B[3])=32

sizeOf(new C())=24

fullSizeOf(new C())=104 -------

sizeOf(new D())=24 -------

fullSizeOf(new D())=56 -------

sizeOf(new int[3])=32

sizeOf(new Integer(1)=16

sizeOf(new Integer[0])=24 -------

sizeOf(new Integer[1])=24

sizeOf(new Integer[2])=24

sizeOf(new Integer[3])=32

sizeOf(new Integer[4])=32

sizeOf(new A[3])=32

sizeOf(new E())=24

通过这个测试,大致可以知道 18 个 CASE 中,相对 HotSpot,OpenJ9 创建的对象,12 个大小持平,5 个占用内存减少,1 个占用内存增加。这个结论虽不足以支撑 OpenJ9 比 HotSpot 更省内存,但是可以看出其确实不一样的内存大小分配逻辑,大胆猜测一下,这套不一致的实现应该是其节省内存的一个点。

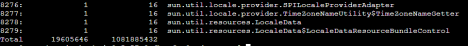

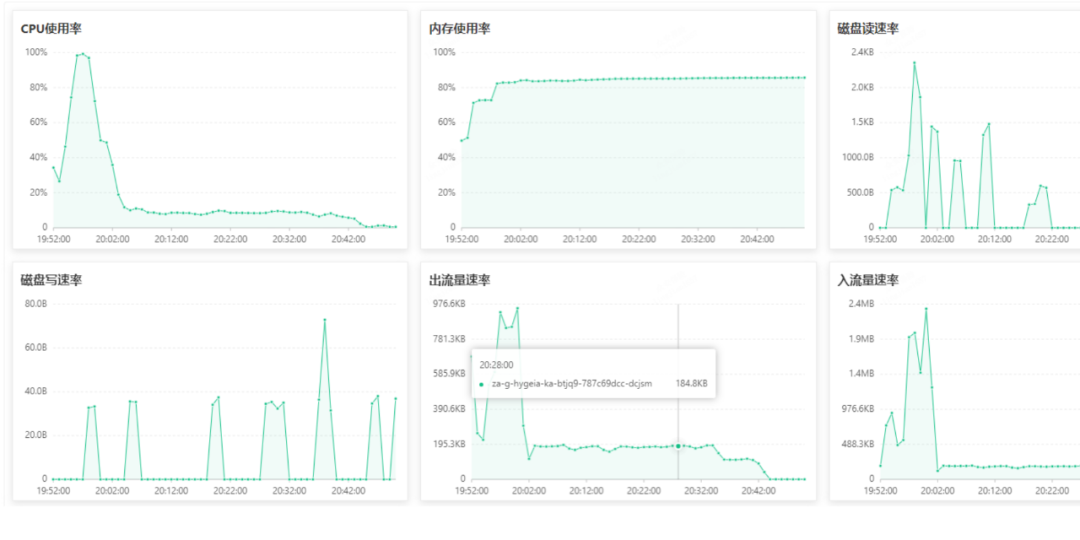

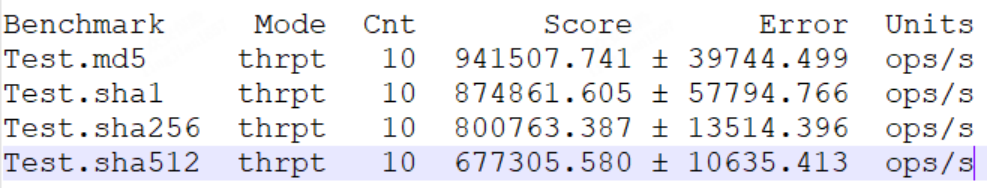

7、OpenJ9 的 BenchMark 测试

到目前为止都是花了比较多的时间在讨论 OpenJ9 内存方面的优势,那它在 CPU 计算层面的表现相对 HotSpot 又是怎样的?下面通过一段 BenchMark 代码测试来验证下。

@State(Scope.Benchmark)

@Fork(2)

public class Test {

public static void main(String[] args) throws RunnerException {

Options options = new OptionsBuilder().include(Test.class.getSimpleName()).build();

new Runner(options).run(); }

@Benchmark

public static void md5() {

DigestUtils.md5Hex(generateStringToHash());

}

@Benchmark

public static void sha1() {

DigestUtils.sha1Hex(generateStringToHash());

}

@Benchmark

public static void sha256() {

DigestUtils.sha256Hex(generateStringToHash());

}

@Benchmark

public static void sha512() {

DigestUtils.sha512Hex(generateStringToHash());

}

public static String generateStringToHash() {

return UUID.randomUUID().toString() + System.currentTimeMillis();

}

}

代码非常简单,分别是几种加密算法,使用的类库是 apache 的 codec,测试条件如下:

Windows 10 64 位

Intel(R) Core(TM) i5-10210U CPU @ 1.60GHz 2.11 GHz

16.0 GB

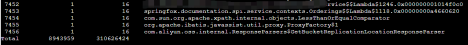

关于 BenchMark 本身,这里也不做更多的阐述,可自行百度。这里对每个方法做了 2 次 Fork,每次 Fork 做 5 次 Warmup 后,再做 5 次 Measurement,得到如下结果:

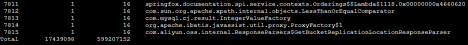

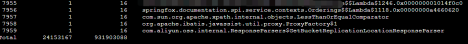

HotSpot:

OpenJ9:

重点关注 Score 列,这里在此次默认的 BenchMark 模式中代表的是吞吐量,结合 Units,即每秒操作多少次,可以看到 OpenJ9 相对 HotSpot 吞吐量还是有下降的。如果单从这个角度看,会对 OpenJ9 的使用持有谨慎的态度,但正如参考链接 9 所述,所有的应用程序不是只做这一件事情,只执行这一段代码。

所以此处准备继续引用一下参考链接 9 来继续描述 BenchMark 的结论。虽然它是基于 Jdk11 来做的测试验证,但我理解这样的结论在目前 Jdk8 中的趋势应该不会改变。

OpenJ9: Switching from HotSpot to OpenJ9 makes ojAlgo faster, ACM slower and EJML fluctuates between roughly the same performance and much slower. It seems OpenJ9 is less stable in its results than HotSpot. Apart from the fluctuations with EJML it widens the gap between the slowest and the fastest code. OpenJ9 makes the fast even faster and the slow even slower............

The speed differences shown here are significant! Regardless of library and matrix size, performance could be halved or doubled by choosing another JVM. Looking at combinations of libraries and JVM:s there is an order of magnitude in throughput to be gained by choosing the right combination.This will not translate to an entire system/application being this much slower or faster – matrix multiplication is most likely not the only thing it does. But, perhaps you should test how whatever you’re working on performs with a different JVM.

大致翻译一下。

OpenJ9:从 HotSpot 切换到 OpenJ9 使 ojAlgo 更快,ACM 更慢,EJML 在大致相同的性能和更慢的性能之间波动。OpenJ9 的结果似乎不如 HotSpot 稳定。除了 EJML 的波动之外,它还扩大了最慢代码和最快代码之间的差距。OpenJ9 使快的更快,慢的更慢。

这里显示的速度差异是显著的!不管库和矩阵的大小如何,选择另一个 JVM 可以使性能减半或加倍。看看库和 JVM 的组合,通过选择正确的组合可以获得一个数量级的吞吐量。

这将不会转化为整个系统/应用程序如此慢或如此快——矩阵乘法很可能不是它唯一做的事情。但是,也许您应该测试您正在处理的任何东西在不同的 JVM 上的执行情况。

附上测试结论数据:

HotSpot:"Benchmark","Mode","Threads","Samples","Score","Score Error (99.9%)","Unit","Param: dim","Param: lib"

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,34413.970020,24755.445712,"ops/min",100,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,85465.595631,7158.774782,"ops/min",100,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,89211.858013,12889.194973,"ops/min",100,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,106731.255464,3657.831785,"ops/min",100,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,10080.519404,3421.528136,"ops/min",150,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,25882.406843,1133.754908,"ops/min",150,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,30965.104808,1835.050624,"ops/min",150,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,29878.718059,874.431921,"ops/min",150,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,4008.914571,2797.192776,"ops/min",200,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,10926.632411,1152.009522,"ops/min",200,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,15404.968617,725.142308,"ops/min",200,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,12666.691647,612.797881,"ops/min",200,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,763.748592,30.917436,"ops/min",350,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,2032.841238,70.656040,"ops/min",350,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,3251.322968,209.402892,"ops/min",350,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,2659.340962,506.193983,"ops/min",350,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,255.822643,59.195722,"ops/min",500,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,696.803016,43.103859,"ops/min",500,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,1081.090826,732.926965,"ops/min",500,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,912.348219,179.074511,"ops/min",500,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,80.205124,10.632867,"ops/min",750,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,206.216518,13.924279,"ops/min",750,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,309.858960,6.985213,"ops/min",750,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,272.868116,15.091327,"ops/min",750,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,25.378833,30.930618,"ops/min",1000,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,85.617121,2.286021,"ops/min",1000,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,132.171220,4.568624,"ops/min",1000,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,116.064807,9.837388,"ops/min",1000,MTJ

OpenJ9:

"Benchmark","Mode","Threads","Samples","Score","Score Error (99.9%)","Unit","Param: dim","Param: lib"

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,14683.462390,538.793271,"ops/min",100,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,80105.353723,5219.442243,"ops/min",100,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,128290.832434,19202.038430,"ops/min",100,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,117431.360792,25258.942865,"ops/min",100,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,4369.110255,1370.251546,"ops/min",150,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,21840.781985,761.252770,"ops/min",150,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,48608.703009,32934.365969,"ops/min",150,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,31828.012559,13729.359128,"ops/min",150,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,1712.217195,41.493227,"ops/min",200,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,3227.834335,282.717723,"ops/min",200,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,25597.291062,6989.989282,"ops/min",200,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,14169.687774,432.516135,"ops/min",200,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,280.190365,29.736756,"ops/min",350,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,1936.031548,544.372429,"ops/min",350,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,6004.867771,1183.160078,"ops/min",350,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,2652.624079,619.430766,"ops/min",350,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,175.313429,5.764318,"ops/min",500,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,691.723102,15.554568,"ops/min",500,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,2133.663163,298.117681,"ops/min",500,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,922.513268,27.892900,"ops/min",500,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,28.178703,109.654076,"ops/min",750,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,43.375818,0.623678,"ops/min",750,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,633.488067,226.129149,"ops/min",750,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,275.391125,10.876657,"ops/min",750,MTJ

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,3.896976,38.325441,"ops/min",1000,ACM

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,17.879150,0.109833,"ops/min",1000,EJML

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,265.467924,6.376378,"ops/min",1000,ojAlgo

"org.ojalgo.benchmark.lab.FillByMultiplying.execute","thrpt",1,3,106.020520,115.325279,"ops/min",1000,MTJ

8、切换前的一些总结、想法

1、尝试 OpenJ9 的使用,应从级别低、相对边缘、内存密集型应用开始,前面所说的级别低和相对边缘比较符合感性认识,但从理性认识,即不如 HotSpot 稳定这个层面,结论也是一致,后面所说的内存密集型应用,是从 OpenJ9 本身的特点来看的;

2、线上切换建议先不做任何 JVM 的配置改动,先执行一段时间,拿到 OpenJ9 的峰值后,再调整配置,调整的值建议在峰值的基础上上浮 10%,避免拿到的是假峰值情况;

9、线上使用遇到的问题

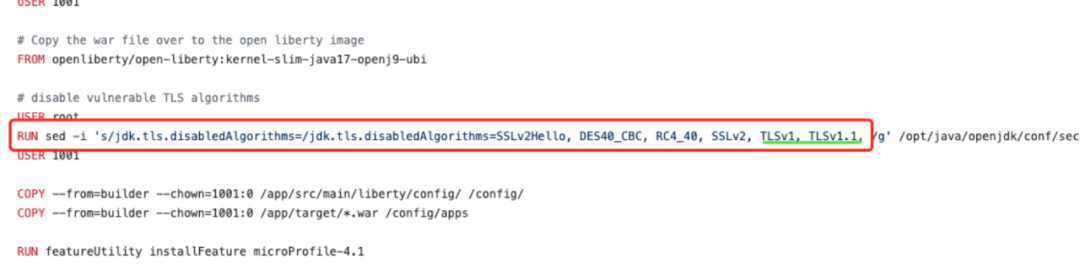

Q:某应用使用了 TLSv1、TLSv1.1,OpenJ9 默认禁用了这两个加解密算法,导致部分业务受影响

A:平台架构部目前给的解决方案如下:jdk.tls.disabledAlgorithms 的配置在较新版本的 jdk 中都是将 TLSv1, TLSv1.1 等默认禁用掉了。OpenJ9 也是一样,如果你想要启用的话,试试在应用的 dockerfile 中修改 java.security 文件,类似:RUN sed - 's/jdk.tls.disabledAlgorithms=SSLv3, TLSv1, TLSv1.1, RC4, DES, MD5withRSA,/jdk.tls.disabledAlgorithms=SSLv3, /g' /opt/java/openjdk/jre/lib/security/java.security 后续的话我们会讨论下这部分相关的支持和后续的方案。https://github.com/IBM/java-liberty-app/blob/master/Dockerfile

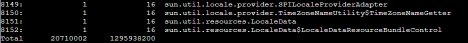

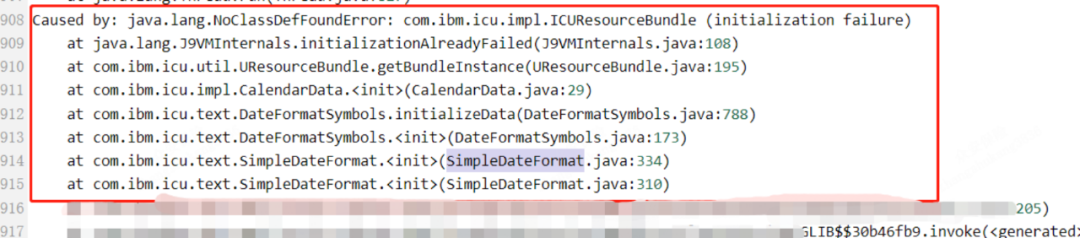

Q:某应用使用了 icu4j 框架,涉及到调用点执行报错,截图如下:

A:icu4j.jar 3.4.4 版本于 06 年发布,版本过低,建议使用 JDK 自带的工具类或者升级该版本。如果应用使用了 icu4j,需注意可能引发的风险。

10、线上使用成果反馈

文末再同步一下笔者所在部门的 OpenJ9 线上切换阶段性成果,首批 23 个应用于春节前完成了切换,涉及相关 POD 节点数量 63 个,总内存 125G,使用内存减少 54G,节省比例达 40% 多,这一比例应该是比上述调研的 30%要更优秀。截止笔者发文,切换的应用也暂未有相关故障产生,表现稳定。

参考资料:

1、https://www.bilibili.com/video/BV1t54y1t7JY/?spm_id_from=333.337.search-card.all.click(PART I)

2、https://www.eclipse.org/openj9/

3、https://www.eclipse.org/openj9/docs/

4、https://www.codenong.com/d-class-sharing-in-eclipse-openj9/

5、https://www.codenong.com/d-class-sharing-in-eclipse-openj9-how-to-improve-mem/

6、https://blog.51cto.com/u_14634606/2478821

7、https://www.cnblogs.com/zhanjindong/p/3757767.html

9、https://www.ojalgo.org/2019/02/quick-test-to-compare-hotspot-and-openj9/